Linuchan

Dabbler

- Joined

- Jun 4, 2023

- Messages

- 27

Over the past year, I've had a good experience with TrueNAS SCALE.

Data stability was good and performance was adequate. That system used six 14TB SAS HDDs plus SLOG disks, and it ran satisfactorily on 10G to 40G network configurations.

This time, I have built a new system for all-flash storage, which I have been interested in and aiming at for a long time.

I left the rest of the settings at their defaults.

And the result is slow read speed with no cause I can identify.

I'm not interested in benchmarks, so this is not about benchmark numbers.

I simply replicated from the existing HDD-based TrueNAS system to the new all-flash system. After replication, reading data from the client runs at only 50 to 100 MB/s, and I am looking for the cause.

The client communicates with the system through a 10GbE NIC, and writes run at virtually the full bandwidth of 10GbE.

Yes, that's about 1 GB/s, and I know this is the result of write caching in RAM and NVMe's strengths working fantastically.
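To put the read numbers next to the write numbers, here is a rough sketch of the arithmetic. It assumes decimal units and ignores protocol overhead, so the ceiling is only nominal; the function names are just ones I made up for illustration.

```python
def link_rate_mb_per_s(gbit_per_s: float) -> float:
    """Nominal link speed in MB/s (decimal units, no protocol overhead)."""
    return gbit_per_s * 1000 / 8


def fraction_of_link(observed_mb_per_s: float, gbit_per_s: float) -> float:
    """Share of the nominal link rate that an observed throughput represents."""
    return observed_mb_per_s / link_rate_mb_per_s(gbit_per_s)


if __name__ == "__main__":
    ceiling = link_rate_mb_per_s(10)  # 10GbE client NIC
    for observed in (50, 100):        # observed read speeds in MB/s
        share = fraction_of_link(observed, 10)
        print(f"{observed} MB/s is {share:.0%} of the nominal {ceiling:.0f} MB/s")
```

By this estimate, reads use only a few percent of the link while writes come close to filling it.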

To find out why the all-flash pool is slow to read, I spent about four days searching Google and communities such as the TrueNAS Forums and Reddit, trying to find and analyze the cause, but I couldn't find it.

From the data and cases I found over those four days, these are the possible causes I listed:

1. ARC Issues

2. Low capacity RAM

3. Inconsistent ZFS and NVMe configurations

4. Cursed.

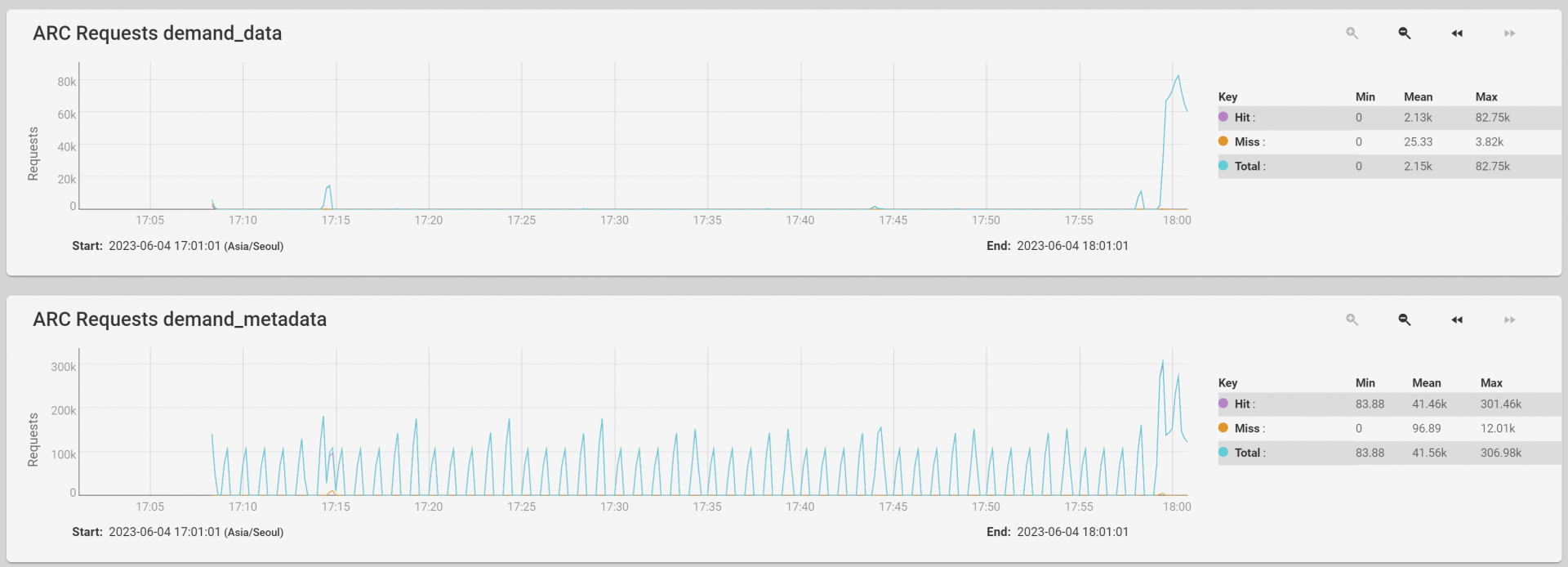

First, for ARC, I checked and saw that the hit ratio dropped during the read operation (see the right end of the graph).
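For anyone who wants the number rather than the graph, the hit ratio over a test window can be worked out from two samples of the ARC counters. This is a minimal sketch: it assumes the cumulative `hits` and `misses` values are read from arcstats (for example `kstat.zfs.misc.arcstats.hits` and `kstat.zfs.misc.arcstats.misses` on Core) before and after the copy. The sample values below are made up, not measurements from my system.

```python
from typing import Optional


def arc_hit_ratio(hits_before: int, misses_before: int,
                  hits_after: int, misses_after: int) -> Optional[float]:
    """Hit ratio for the interval between two samples of cumulative counters."""
    hits = hits_after - hits_before
    misses = misses_after - misses_before
    total = hits + misses
    if total == 0:
        return None  # no ARC lookups happened in the interval
    return hits / total


if __name__ == "__main__":
    # Hypothetical counter values, for illustration only.
    ratio = arc_hit_ratio(1000, 100, 1300, 200)
    print(f"hit ratio during the window: {ratio:.0%}")
```

Using the difference between two samples matters because the counters are cumulative since boot, so a single reading hides what happened during the copy itself.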

And as expected, ZFS doesn't seem to be actively reading from the disks.

I tried enabling the Autotune function provided by TrueNAS, but nothing improved.

The test was to copy approximately 60GB of ZIP files from the pool to the client; nothing else was running.
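To show what that means for this test, here is a small sketch of how long a 60GB copy takes at the observed read speeds compared with the nominal 10GbE rate. It assumes decimal units (1 GB = 1000 MB) and a constant transfer rate, which a real copy will not hold exactly.

```python
def transfer_seconds(size_gb: float, rate_mb_per_s: float) -> float:
    """Time to move size_gb at a constant rate, using 1 GB = 1000 MB."""
    return size_gb * 1000 / rate_mb_per_s


if __name__ == "__main__":
    size_gb = 60
    # 50 and 100 MB/s are the observed reads; 1250 MB/s is nominal 10GbE.
    for rate in (50, 100, 1250):
        seconds = transfer_seconds(size_gb, rate)
        print(f"{rate:>5} MB/s -> {seconds / 60:.1f} minutes")
```

So the same copy takes 10 to 20 minutes at the speeds I am seeing, against under a minute if reads could fill the link.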

Actually, the system was originally installed with 'Scale', but when the read speed problem appeared, I reinstalled with 'Core'.

But the problem remains the same, and I feel hopeless.

I am using SMB and iSCSI. Both have the same problem.

I know that my configuration is lower than the usual enterprise-level specifications.

But most of my workloads are just storing cold data in a pool, sometimes browsing videos and photos.

I think it would be more appropriate to describe it as midline storage for just me.

So I thought I could simply enjoy the native performance of NVMe, fast NICs, a fast switch, and so on.

However, there is an unexpected problem, so I would like to get your opinion and help.

I actually thought there would be a problem with writing speed, but the real problem is reading speed.

If you tell me what I missed and what else I need to check, I'll try it.

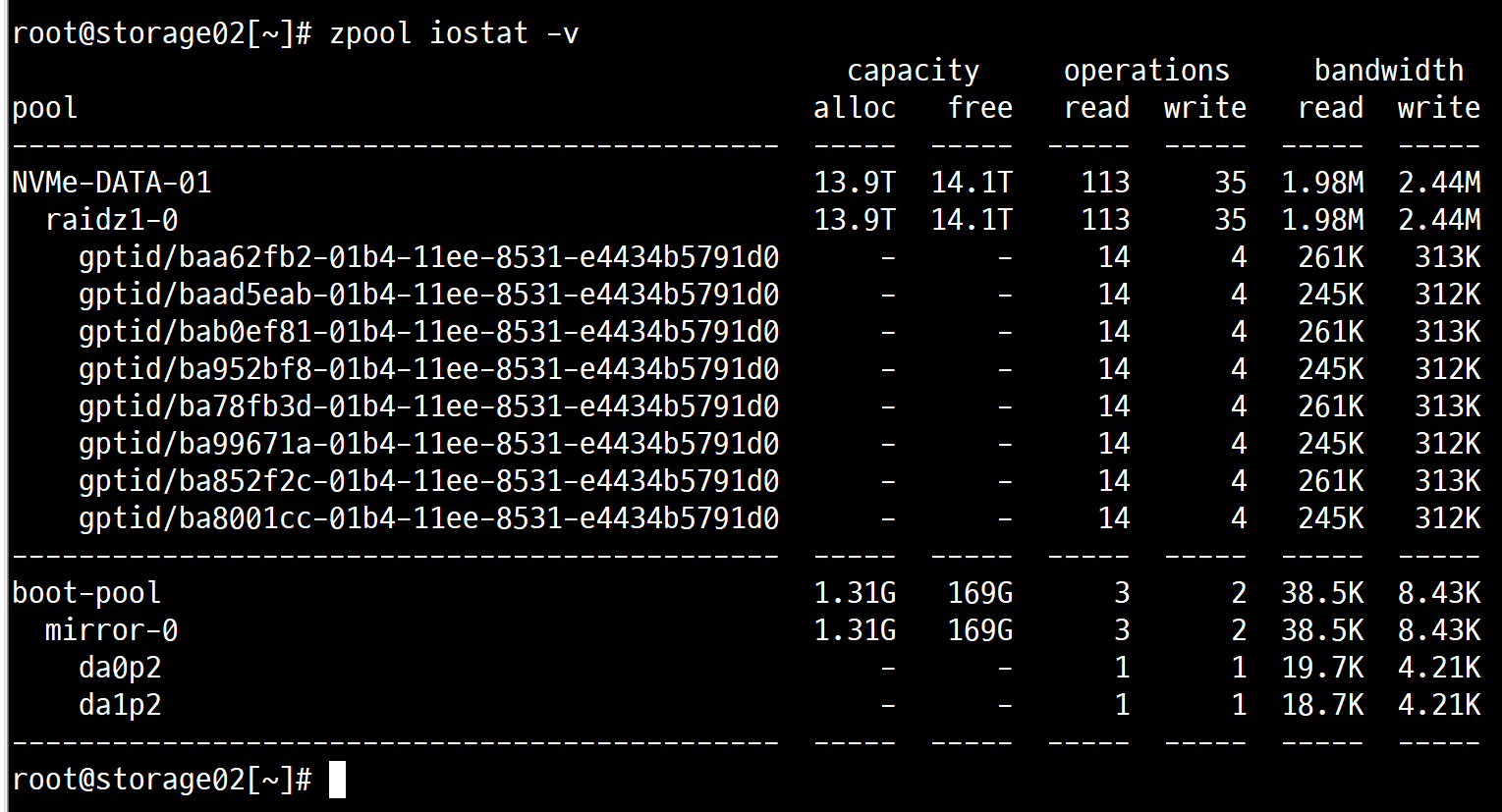

- System: Dell PowerEdge R640 10-Bay with NVMe enabled configuration

- DISK (for boot): 200GB SAS SSD x 2

- DISK (for DATA): 3.84TB U.2 NVMe x 8 (Micron 7300 Pro)

- MEM: 64GB ECC/REG

- CPU: Xeon Silver 4114 x 2 (2cpu configuration)

- NIC: Intel i350+X550 rNDC, Mellanox ConnectX-4 100GbE Dual Port
